eBook

Chasing Real-time Data ROI: Strategies to boost streaming data quality and value

Read this ebook and see how Precisely can work with your organization to boost streaming data quality and value.

Streaming data quality

It’s well known that data is growing at an unprecedented pace, but consider these startling statistics from a Forbes article, all projected for the year 2020:

- About 1.7 megabytes of new information will be created every second for every human being on the planet

- We will have more than 6.1 billion smartphone users globally

- There will be more than 50 billion smart connected devices in the world

- At least one third of all data will pass through the cloud

As the pace of digital transformation continues to accelerate, organizations are quickly realizing that “big data” is no longer the buzzword. In fact, the sheer scale of data is making large, complex data movements increasingly difficult to manage with batch processing. Organizations that don’t keep up with technology trends run the risk of being left behind.

To keep pace with data creation and glean faster insights than ever before, organizations are moving toward the power of streaming data to achieve critical objectives, improve the customer experience, optimize operations and decrease costs and inefficiencies. But organizations need a solution to effectively collect, store, broker and leverage data.

While the term “big data” was undeniably the buzzword mid-decade, the buzzword for the new decade is “streaming data.”

Among available options, the open source, distributed streaming platform Apache Kafka has quickly emerged as the leading choice among businesses. And with good reason—Kafka offers organizations a broad range of advantages, including:

High-throughput

This is simply how much data can be received, processed and sent in a given time period. Kafka was specifically built to handle high volumes of data in short timeframes—supporting message throughput of thousands of messages per second.

Reliability

Kafka duplicates data to guard against data loss, and continues to function even in the event of component failures. These distributed, partitioned, replicated and fault-tolerant features make it extremely reliable.

Low-latency

This is how quickly data can be sent through systems or networks. Kafka features low latency in the range of milliseconds.

Scalability

This is how well a system can handle additional work and volume. Kafka scales easily with no downtime or data loss.

Durability

This is how long data may be safely stored after creation. In Kafka, data is replicated and stored across distributed systems, so it can be kept safely for as long as the configured retention policy allows, including indefinitely.

ETL-like capabilities

An ETL (extract, transform, load) process pulls data from one or more sources, converts it to a unified format and loads it into a destination system. Because of its batch-handling capabilities, Kafka can perform the work of a traditional ETL tool.
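To make the three ETL stages concrete, here is a minimal Python sketch that runs them over a small in-memory list of messages. It does not use Kafka itself. The field names (`customer_id`, `custId`, `amount`), the target format and the `warehouse` list are assumptions chosen for illustration only.

```python
import json
from typing import Iterable, Iterator


def extract(raw_messages: Iterable[str], rejected: list) -> Iterator[dict]:
    """Extract: parse each raw JSON message, setting aside anything unparseable."""
    for raw in raw_messages:
        try:
            yield json.loads(raw)
        except json.JSONDecodeError:
            rejected.append(raw)


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Transform: convert differently shaped source records to one unified format."""
    for rec in records:
        yield {
            # Hypothetical sources disagree on the name of the customer key.
            "customer_id": str(rec.get("customer_id") or rec.get("custId")),
            # Store money as integer cents to avoid floating-point drift.
            "amount_cents": round(float(rec.get("amount", 0)) * 100),
        }


def load(records: Iterable[dict], destination: list) -> int:
    """Load: append each record to the destination and return how many were loaded."""
    count = 0
    for rec in records:
        destination.append(rec)
        count += 1
    return count


source_messages = [
    '{"customer_id": 17, "amount": "12.50"}',
    '{"custId": "A-9", "amount": 3}',
    "not json",
]
warehouse: list = []
rejected: list = []
loaded = load(transform(extract(source_messages, rejected)), warehouse)
print(loaded, warehouse, rejected)
```

Because each stage is a generator, records flow through one at a time rather than being collected in full first, which is closer to how a streaming pipeline behaves than a classic batch job.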

Many organizations are making the investment in streaming data and event-driven architectures, but the relative advantages of Kafka can quickly be outweighed by the threat of poor data quality, because speed and scale can’t prevent poor data quality from turning data assets into liabilities.

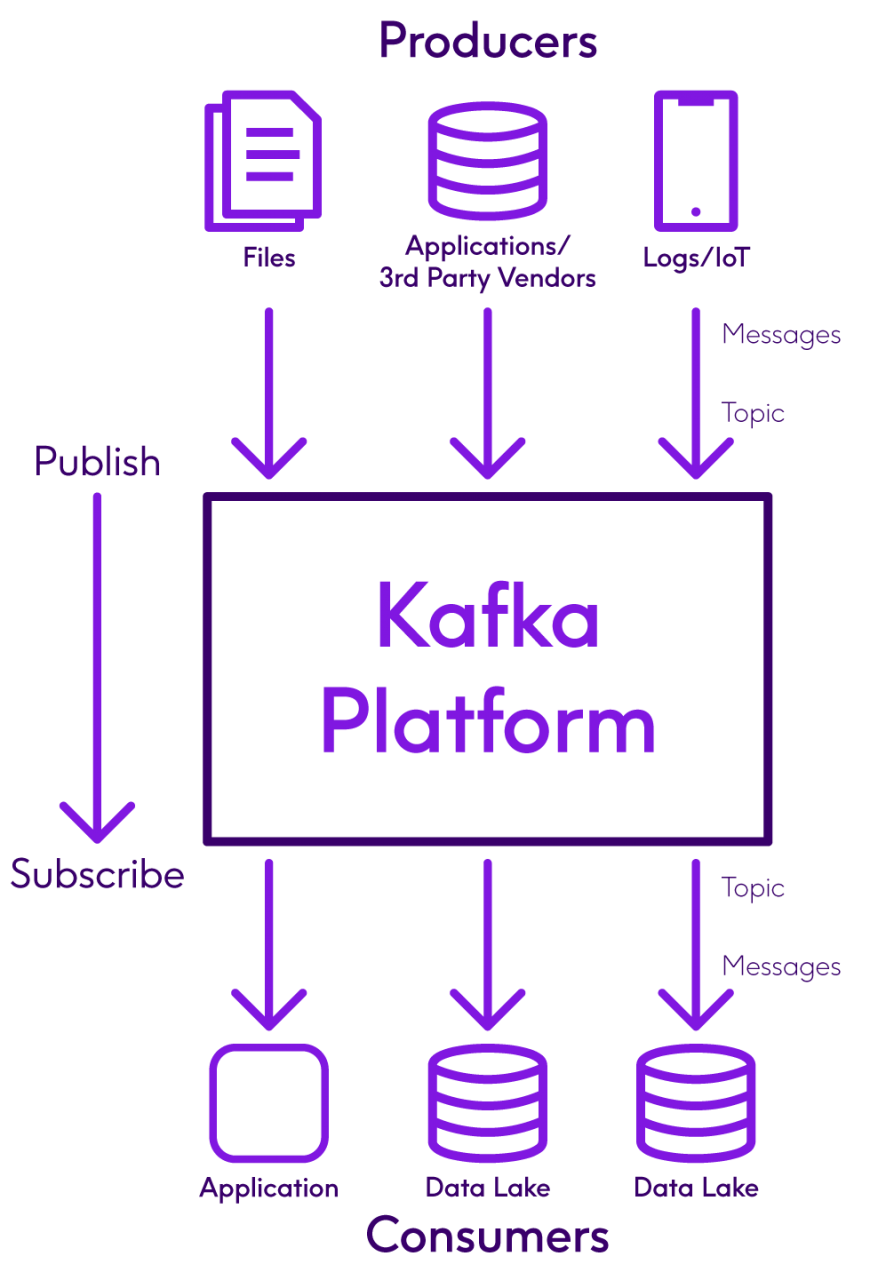

To reliably deliver quality data that consumers can trust, you need end-to-end data validation, from the data producers publishing messages to the data consumers who have subscribed to them.

To ensure consistent, complete and accurate data messaging, you need to assure data quality at every step of streaming data’s journey:

- Validate that all messages are sent from Producers

- Validate that all messages are received by Consumers

- Detect and eliminate duplicate messages

- Verify the timely arrival of every message

- Confirm that all messages were aggregated and transformed correctly
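The first four checks above can be sketched in a few lines of Python. This is an illustrative outline, not a production implementation: it assumes every message carries a unique ID and a producer-side timestamp, and that the producer's list of sent IDs is available for comparison. The `Message` class, the `validate_delivery` function and the five-second latency limit are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    message_id: str
    sent_at: float      # seconds since epoch, stamped by the producer
    received_at: float  # seconds since epoch, stamped by the consumer


def validate_delivery(sent_ids, received, max_latency_seconds):
    """Compare what producers sent with what a consumer actually received."""
    received_counts = Counter(m.message_id for m in received)
    sent_set = set(sent_ids)
    return {
        # Sent by a producer but never seen by the consumer.
        "missing": sorted(sent_set - set(received_counts)),
        # Seen by the consumer but never reported as sent.
        "unexpected": sorted(set(received_counts) - sent_set),
        # Delivered more than once.
        "duplicates": sorted(i for i, n in received_counts.items() if n > 1),
        # Arrived later than the allowed latency.
        "late": sorted(
            {m.message_id for m in received
             if m.received_at - m.sent_at > max_latency_seconds}
        ),
    }


sent_ids = ["m1", "m2", "m3", "m4"]
received = [
    Message("m1", 100.0, 100.2),
    Message("m2", 101.0, 109.0),  # arrives 8 seconds after it was sent
    Message("m2", 101.0, 109.5),  # second delivery of the same message
    Message("m3", 102.0, 102.1),
]
report = validate_delivery(sent_ids, received, max_latency_seconds=5.0)
print(report)
```

Here `m4` is reported as missing, and `m2` as both duplicated and late. Checking that aggregations and transformations were applied correctly depends on the business logic involved and is left out of this sketch.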

As discussed, more data doesn’t equate to better data. In fact, it’s the opposite. More data means more responsibility to ensure its quality. Below are 9 critical steps to ensure Kafka data quality:

- Establish data quality rules to confirm data integrity at the source.

- Perform in-line checks where real-time responses to data quality issues are required (for example, potential fraud, compliance violations or negative customer experience).

- Whether your Kafka framework offers “no guarantee” or “exactly once/effectively once” semantics, eliminate message duplication.

- Confirm message receipt by every consumer.

- Reconcile data between producers and consumers to ensure that data hasn’t been altered, lost or corrupted.

- Conduct data quality checks to confirm expected data quality levels, completeness and conformity.

- Monitor data streams for expected message volumes and set thresholds.

- Establish workflows to route potential issues for investigation and resolution.

- Monitor timeliness to identify issues and ensure SLA compliance.
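Several of these steps can be combined in a single in-line check. The Python sketch below applies data quality rules to each record, monitors message volume against a threshold, and collects anything suspicious for follow-up. The rule set, the record fields (`account_id`, `amount`, `currency`), the volume limits and the `issues` list standing in for an investigation workflow are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical data quality rules: each maps a rule name to a pass/fail check.
RULES: dict[str, Callable[[dict], bool]] = {
    "account_id present": lambda r: bool(r.get("account_id")),
    "amount is a non-negative number": lambda r: (
        isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0
    ),
    "currency is a 3-letter code": lambda r: (
        isinstance(r.get("currency"), str) and len(r["currency"]) == 3
    ),
}


def failed_rules(record: dict) -> list:
    """Return the names of every rule the record violates."""
    return [name for name, check in RULES.items() if not check(record)]


def process_window(records: list, expected_min: int, expected_max: int):
    """Check one time window of records; return (accepted records, issues to route)."""
    accepted, issues = [], []
    for record in records:
        failures = failed_rules(record)
        if failures:
            issues.append(
                {"type": "rule_violation", "record": record, "failed": failures}
            )
        else:
            accepted.append(record)
    # Volume monitoring: too few or too many messages is itself a warning sign.
    if not expected_min <= len(records) <= expected_max:
        issues.append({"type": "volume_out_of_range", "count": len(records)})
    return accepted, issues


window = [
    {"account_id": "A1", "amount": 25.0, "currency": "USD"},
    {"account_id": "", "amount": 10.0, "currency": "USD"},
    {"account_id": "A3", "amount": -5, "currency": "EURO"},
]
accepted, issues = process_window(window, expected_min=5, expected_max=100)
for issue in issues:
    print(issue)
```

In practice the `issues` list would feed a queue or ticketing workflow so that each problem reaches someone who can investigate and resolve it.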

The potential of streaming data knows no borders, and its power can be used to improve operations across every industry. Below are just a few examples of how Kafka is used, and why streaming data quality is critical.

Financial services

Use case

- Multinational financial services company streams thousands of daily financial transactions

Data Quality Solution

- In-line data quality validations to check completeness and accuracy

Insurance

Use case

- Leading auto insurer’s usage-based insurance (UBI) program collects data to assess risk and offer driver discounts

Data Quality Solution

- Data quality checks at consumption for conformity and completeness

Healthcare

Use case

- Major health system streams real-time data for better clinical decision-making, care coordination and outcomes

Data Quality Solution

- In-line checks for threshold violations; batch analysis for clinical insights

Consumer packaged goods

Use case

- Transactional data monitoring to identify customer experience anomalies

Data Quality Solution

- In-line checks and alerts for timeliness delays, threshold violations

Utilities & energy

Use case

- Analyze real-time smart meter and other data to predict outages

Data Quality Solution

- Batch analysis for accuracy and completeness

Manufacturing

Use case

- Inventory and supply chain management

Data Quality Solution

- Reconciliation and balancing between producer and consumer data
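As an illustration of reconciliation and balancing, the Python sketch below compares control totals (message count and total quantity per item) computed on the producer side with the same totals computed on the consumer side. The event shape with `sku` and `quantity` fields is a hypothetical example, not a prescribed format.

```python
from collections import defaultdict


def control_totals(events):
    """Return {sku: (message_count, total_quantity)} for a list of events."""
    totals = defaultdict(lambda: [0, 0])
    for event in events:
        entry = totals[event["sku"]]
        entry[0] += 1
        entry[1] += event["quantity"]
    return {sku: tuple(values) for sku, values in totals.items()}


def out_of_balance(produced, consumed):
    """List every SKU whose producer and consumer control totals disagree."""
    p, c = control_totals(produced), control_totals(consumed)
    empty = (0, 0)
    return {
        sku: {"produced": p.get(sku, empty), "consumed": c.get(sku, empty)}
        for sku in sorted(set(p) | set(c))
        if p.get(sku, empty) != c.get(sku, empty)
    }


produced = [
    {"sku": "bolt", "quantity": 100},
    {"sku": "bolt", "quantity": 50},
    {"sku": "nut", "quantity": 30},
]
consumed = [
    {"sku": "bolt", "quantity": 100},
    {"sku": "nut", "quantity": 30},
]
breaks = out_of_balance(produced, consumed)
print(breaks)
```

In this example one `bolt` message never reached the consumer, so the totals for that item fail to balance while `nut` reconciles cleanly.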